Not by AI: a badge to say you're (mostly) human

Badges are cool, and sporting them on your clothing lets people know what's important to you - along with other details and observations you proudly or ironically advertise to the world.

It may be that you've recently been to a Kylie Minogue concert and bought the only official merch you could afford; maybe you feel the need to declare your love of dogs, or that you wish your life was narrated by Morgan Freeman; perhaps it's your birthday - in which case, Happy Birthday!

Ever felt the need to declare that you're not a robot? We certainly haven't - our physical presence and general awkwardness, along with the complete lack of a shiny skeleton and Austrian accent, indicate that we're genuine humans.

In the online world, it's different.

On the internet, no-one knows you're a probability engine...

ChatGPT from OpenAI came onto the scene almost a year ago on November 30th 2022, and it's impossible to overstate how profoundly it altered the media landscape, work, and entire economies - as well as what you read and how you interact with your fellow humans.

Sure, we'd seen approximations of artificial intelligence in the wild before. Most notable was Microsoft's Tay chatbot - modeled on an American teenage girl.

Described as "The AI with zero chill", and loosed on the world through the questionable medium of Twitter, Tay quickly became a racist, xenophobic, sex-obsessed Hitler fan. We have no experience with American teenage girls, so are unable to say whether this is typical or not. Either way, Microsoft shut down the bot with extreme prejudice a day later.

ChatGPT is different. It can hold coherent conversations, summarise documents, tell you facts, or convincingly pretend to be other people. It's a revelation, and a miracle of the early 21st century.

Within weeks, the company formerly known as Facebook revealed that it too had been working on AI, and shortly afterwards, LLaMA was unleashed.

Now, if you need to write a college application essay but have left it until 11.59 on deadline day, you can have one of the many AI web services knock one out in a few seconds; if you need an award-winning photograph or artistic masterpiece, DALL-E or Stable Diffusion can generate one in a few minutes.

This writer keeps offline versions of Stable Diffusion, and an uncensored LLaMA model for shits and giggles. It's trivial to give it a persona, ask it a question and have your pet AI answer in character.

AI is flooding the zone with shit

Traffic is the lifeblood of the internet, and the more traffic you can generate, the more money you can make for your shareholders.

Typically, this content is generated by writers, artists, poets, photographers, and other creative types. Generally, you have to pay them to produce the kind of work that draws in visitors.

AI though? AI-generated content is free. Sure, you need to pay a subscription for access to the latest models and reliable service, but if you don't mind joining the queue and putting up with spotty availability, you can create passable content with ease.

And passable is about as good as it gets. Reliable? Not really - we're sure you recall the comedy that ensued when New York lawyer Steven A Schwartz was asked to research cases for a colleague, and turned to ChatGPT instead. Non-existent cases were cited in court, and the lawyers were publicly bollocked at a special hearing (paywall link).

Using AI to cite case law is high stakes, and comes with high risks - if you're a media outlet, then as long as you're not libeling anyone, it doesn't seem quite so important.

CNET's attempts to write articles using "AI Assist" were highly publicised, and the outlet was ridiculed for its mistakes - but CNET was one of the first, and it's unlikely to be the last. Most outlets probably won't even acknowledge that they're using AI tools.

Newsquest is the second-largest publisher of regional and local newspapers in the UK. It's owned by US media giant Gannett - a company that you might recall was looking to hire a full-time, video-forward Taylor Swift Reporter, shortly after laying off nearly 10% of its staff.

In September, Newsquest advertised for an AI-assisted reporter to "be at the forefront of a new era in journalism, utilising AI technology to create national, local, and hyper-local content for our news brands, while also applying their traditional journalism skills."

The advert was still up as of November 2023, and we look forward to seeing the "AI-assisted" disclaimer when a suitable candidate is eventually hired. We won't though.

What does it mean to be human?

We've seen complete AI-written articles all across the internet. Sometimes we can tell the difference - especially in the tech tutorial space. Even at the biggest publishers, AI-written articles from freelancers, while explicitly forbidden, are tacitly allowed because the relentless production process doesn't encourage editors to pick up on what is clearly (to someone knowledgeable in the field) machine-written drivel.

Humans bring something more to art and writing than can be produced by a chatbot, and no, we're not going to quote the famous dialogue from I, Robot.

It's hard to quantify or qualify exactly what that something is, but it's easy to find if you read quality independently published blogs on the internet: we'd suggest Ernie Smith's Tedium, or any other well-thought-out one-person publication. In the more mainstream space, you'll see it in The New Yorker, The Markup, and 404 Media.

It's excellent writing, and critical thinking from people who clearly know what they're talking about. AI doesn't investigate, nor does it ruminate or have an original thought. It's a probability engine recycling what it's previously been fed. Eventually, AI will end up eating its own shit, but that's a story for another day.

To a casual internet user, reader, or appreciator of art, it's not always obvious what is AI-generated content and what is real, human, and authentic. If we're being honest, even before AI, a large proportion of what you read online was content-mill nonsense, but now, even those underpaid dollar-per-day spinners are out of a job.

Alleged Wikipedia editor, middle initial fabricator, fake name lover, and the UK's current Secretary of State for Defence (The Right Honourable Grant Shapps, Member of Parliament for Welwyn Hatfield) used to make his living via article-spinning software - before presiding over an unrelated empire of filth. By all reports, it was so shit that 19 of his companies were banned by Google (do you know how bad you have to be to end up blacklisted by big G?), so in all probability, AI will actually improve the readability of some web content. The best will always stand out, if you can find it.

But that's not the point.

Proclaim (and reclaim) your humanity

For companies, it will always be desirable to reduce capital costs and maximise profitability. Sure, shovel shit for SEO a la Grant Shapps and countless others. But while it may have, historically, fooled search engines and maximised SEO, it was pretty fucking obvious to a genuine human person (or three dogs in a trenchcoat) that a trash page on a trash site was trash.

It's harder now - AI spews grammatically correct, well-structured, plausible bullshit, and there needs to be a way of distinguishing the trash from considered and curated content.

Looping back to the beginning of this article, there needs to be a badge that says that the writer is at least mostly human.

Where did the badge come from? What's the point? Does it actually mean anything?

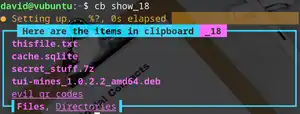

Somewhere on this page you'll see a virtual badge that states, "Written by human not by AI". It proudly proclaims that this writer is human - which is something we've always suspected, but never been able to prove.

We can't prove it now, of course. The badge is a virtual artefact - and like the NFT madness of 2022, there's little to stop anybody right-clicking it and sticking it on their website. The badge is an SVG file - go on, we dare you.

The fucking thing isn't even trademarked.

But the badge was created by a human, and that means that it's automatically copyrighted to Allen, the founder of Not By AI. There's a license attached, and there are conditions for its use.

Allen recognises that AI is here, and here to stay. He appreciates that AI is used for research*, and for purposes that are not central to the site on which the badge is displayed. That's probably why there are three versions of the badge.

In addition to the "Written by human" badge, you can also grab the "Painted by human" badge if your work is based around artwork, or "Produced by human" for anything else.

It doesn't even mean that your work has to be completely created by a human being - 90% is all Allen asks. That's pretty reasonable.

In fact, for us, that's great. While the production of this article has involved no AI whatsoever, we do have a monthly AI-generated horoscope, purportedly cast by a psychic cat named "Mystic Mog". It's a low-effort gimmick, and one that we find hilarious. We don't think we're threatening the livelihoods of any genuine professional prognosticators with it. And it's under the 10% mark.

More questionable is our use of AI-generated imagery. Again, this isn't particularly prevalent. We've gone overboard with it in this article (by feeding the H2s into an AI image generator) to make a point, but usually, we opt for royalty-free images, or whatever we can knock up in GIMP. We use an offline version of Stable Diffusion to create entertaining(ish) images for dry-as-sandpaper topics such as installing Docker Compose, or a tool to monitor Podman deployments. There are only so many Unsplash-licensed shipping container images out there, and what the hell does a Podman look like anyway?

That's why we have the "Written by human" badge - we don't have the "Painted by human" or the "Produced by human" badges. We can't claim that.

Anyone familiar with the Not by AI scheme knows pretty much what they're getting here. The articles are written by a human, some of the images may not be. Got it.

According to the license, Not by AI badges are free for non-commercial use, but cost $99 if you're a business.

Currently, Linux Impact makes exactly $0, although we hope that will change (check out our affiliate links and banners!). We emailed Allen, and he said we're fine. He'll be updating the license to better clarify what constitutes commercial or non-commercial usage.

I'm not paying for that!

Good. We hope that's what most of the gigantic, shit-shovelling content mills will say - even as they incorporate more and more AI into their workflow.

The Shapps-style operations though? They'll have no qualms about appropriating the badge if they think it will give them more credibility, more SEO juice, more clicks, or more authenticity.

We don't know where Allen's $99 goes. He could be spending it on blackjack, hookers, and rum-based cocktails for all we know.

It's more likely, however, that he supports himself financially while spending long days checking where the image is used, auditing the content, and preparing a war chest to deal with entities that use his little SVG files where they shouldn't. It'll be cool.

But Not by AI stands a chance - not a huge chance - of improving the web as you use it today. The little badge at the top of the page means that someone, somewhere, has spent their Sunday afternoon wearing their fingers down by grudgingly typing out 1,980 words. It's considered, and they've thought about it. It's not just a tweaked prompt response, based on someone else's work.

That has to mean something, right?

Right?